Regularization in Machine Learning: Meaning

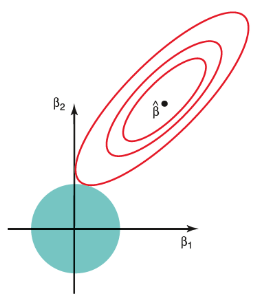

This is where regularization comes into the picture. It shrinks, or regularizes, the learned coefficient estimates towards zero by adding a penalty term to the loss function, so that the optimized parameters give a model that predicts Y accurately.


In simple words regularization discourages learning a more complex or flexible model to.

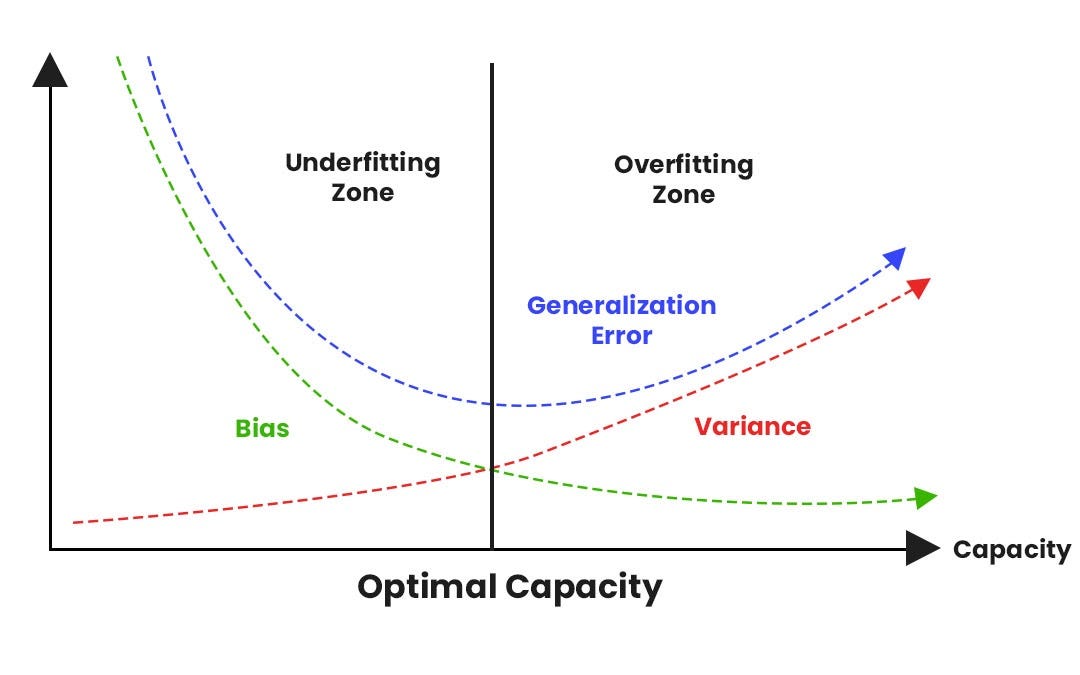

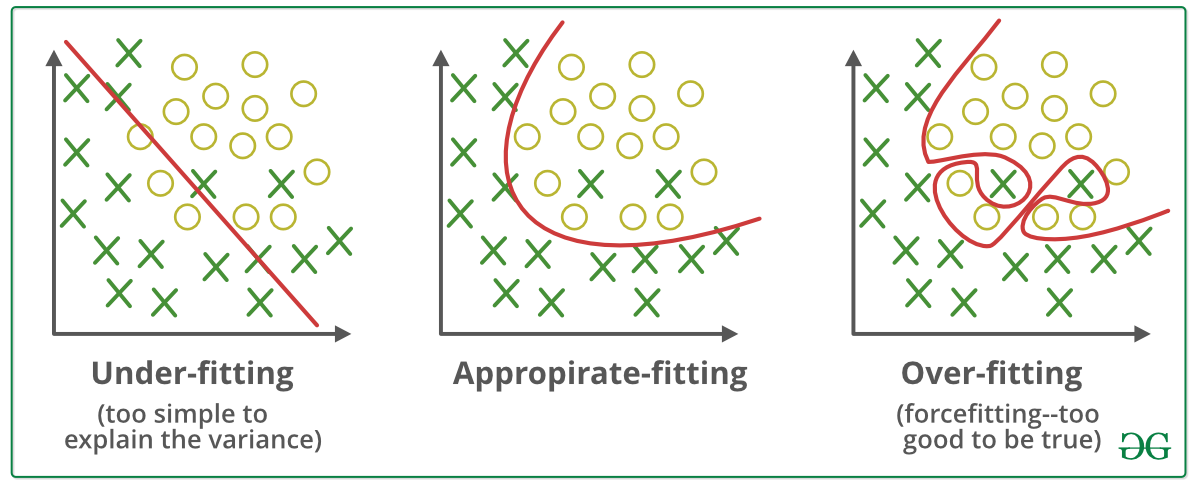

. In other words this technique discourages learning a more complex or flexible model so as to avoid the risk of overfitting. The regularization techniques prevent machine learning algorithms from overfitting. As seen above we want our model to perform well both on the train and the new unseen data meaning the model must have the ability to be generalized.

Regularization is a technique used to address the overfitting problem in machine learning models. In this context, it is the process that regularizes, or shrinks, the coefficients towards zero: for every weight w, a penalty on its magnitude is added to the objective.

This is exactly why we use it in applied machine learning. It prevents the model from overfitting by adding extra information to it, which discourages a more complex or flexible fit.

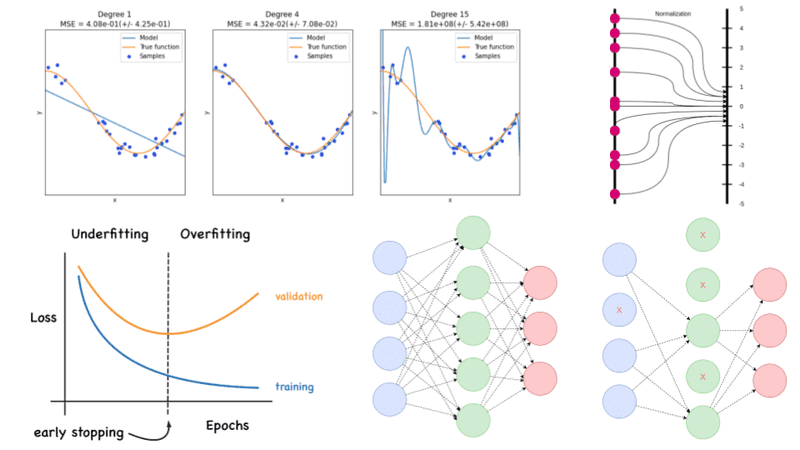

We all know machine learning is about training a model on relevant data and using the model to make predictions on unknown data. Regularization is essential in both machine learning and deep learning. Overfitting occurs when a model is fit too closely to the training set and cannot perform well on unseen data.

Regularization is one of the key concepts in machine learning, as it helps choose a simple model rather than a complex one. Linear regression, which models the response as a weighted sum of the predictors, is the simplest setting in which to see this: regularization is a procedure that shrinks the coefficients towards zero.
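As a sketch of such a linear relation, with notation assumed here rather than taken from the source (weights $w_j$, predictors $X_j$, and $p$ predictors in total):

$$
Y \approx w_0 + w_1 X_1 + w_2 X_2 + \dots + w_p X_p
$$

Without regularization, the weights are usually chosen to minimize the residual sum of squares over the $n$ training examples $(x_i, y_i)$:

$$
\mathrm{RSS}(w) = \sum_{i=1}^{n} \Big( y_i - w_0 - \sum_{j=1}^{p} w_j x_{ij} \Big)^2
$$

Regularization changes this objective so that large weights are penalized, which is what pulls the estimates towards zero.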

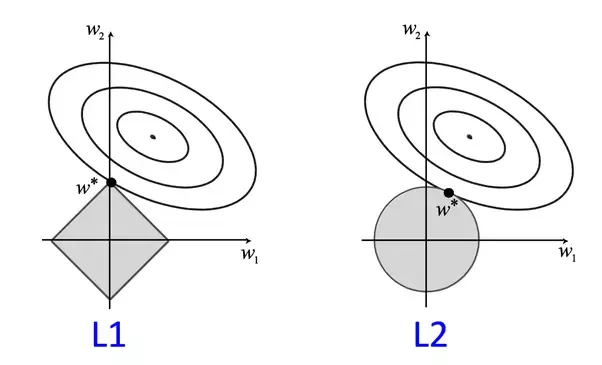

Mainly there are two types of regularization techniques: L1 (lasso) and L2 (ridge). Regularization can also be viewed as a process of adding more information to resolve a complex problem and avoid overfitting.

The regularization term modifies the error term without depending on the data. Regularization reduces the model's variance without a substantial increase in bias. In regression, it constrains, regularizes, or shrinks the coefficient estimates towards zero.
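The shrinking effect can be seen numerically. The following is a minimal sketch, not taken from the source: it assumes ridge (L2-penalized) linear regression solved in closed form, synthetic data, and a hypothetical helper named `ridge_coefficients`. The name `lam` for the penalty strength is also an assumption.

```python
import numpy as np

def ridge_coefficients(X, y, lam):
    """Closed-form ridge estimate: solve (X^T X + lam * I) w = X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

# Synthetic data (an assumption for illustration): 50 samples, 5 predictors.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
true_w = np.array([3.0, -2.0, 0.0, 1.5, 0.0])
y = X @ true_w + rng.normal(scale=0.5, size=50)

# Larger lam means a stronger penalty on the weights.
lambdas = [0.0, 1.0, 10.0, 100.0, 1000.0]
norms = [float(np.linalg.norm(ridge_coefficients(X, y, lam))) for lam in lambdas]

for lam, norm in zip(lambdas, norms):
    print(f"lambda={lam:>7.1f}  ||w||={norm:.4f}")
```

Under these assumptions, the norm of the coefficient vector decreases as `lam` grows, which is the shrinkage described above. With `lam = 0` the estimate is ordinary least squares.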

Both overfitting and underfitting ultimately cause poor predictions on new data. When you train a model, for example an artificial neural network, you will encounter numerous problems of this kind. Regularization is not a complicated technique, and it simplifies the machine learning process.

Regularization is a machine learning technique in which overfitting is avoided by adding extra, relevant information to the model, typically as a penalty term rather than as more training data. This is an important theme in machine learning.

While regularization is used with many different machine learning algorithms, including deep neural networks, in this article we use linear regression to explain regularization and its usage. Sometimes one resource is not enough to give you a good understanding of a concept. Overfitting in an existing model can be avoided by adding a penalty term to the cost function that gives a higher penalty to more complex curves.
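A penalized cost function of this kind can be sketched as follows. This is an illustration under stated assumptions, not the article's own code: the loss is mean squared error, the penalty is the L2 term `lam * sum(w**2)`, and the function names, learning rate, and step count are all hypothetical choices.

```python
import numpy as np

def penalized_cost(w, X, y, lam):
    """Mean squared error plus an L2 penalty on the weights (assumed form)."""
    residuals = X @ w - y
    return float(np.mean(residuals ** 2) + lam * np.sum(w ** 2))

def penalized_gradient(w, X, y, lam):
    """Gradient of penalized_cost with respect to w."""
    n_samples = X.shape[0]
    return (2.0 / n_samples) * (X.T @ (X @ w - y)) + 2.0 * lam * w

def fit_gradient_descent(X, y, lam, learning_rate=0.05, n_steps=2000):
    """Plain gradient descent from w = 0; hyperparameters are illustrative."""
    w = np.zeros(X.shape[1])
    for _ in range(n_steps):
        w = w - learning_rate * penalized_gradient(w, X, y, lam)
    return w

# Synthetic data, assumed purely for illustration.
rng = np.random.default_rng(1)
X = rng.normal(size=(100, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + rng.normal(scale=0.3, size=100)

w_plain = fit_gradient_descent(X, y, lam=0.0)
w_penalized = fit_gradient_descent(X, y, lam=1.0)
print("no penalty:  ", np.round(w_plain, 3))
print("with penalty:", np.round(w_penalized, 3))
```

The only difference between the two fits is the extra term in the cost and its gradient. With `lam > 0`, every step also pulls each weight towards zero in proportion to its size, so the fitted weights end up smaller in norm than the unpenalized ones.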

It is done to minimize the error so that the machine learning model behaves appropriately for a given range of test inputs. Regularization refers to techniques used to calibrate machine learning models in order to minimize the adjusted loss function and prevent overfitting or underfitting.

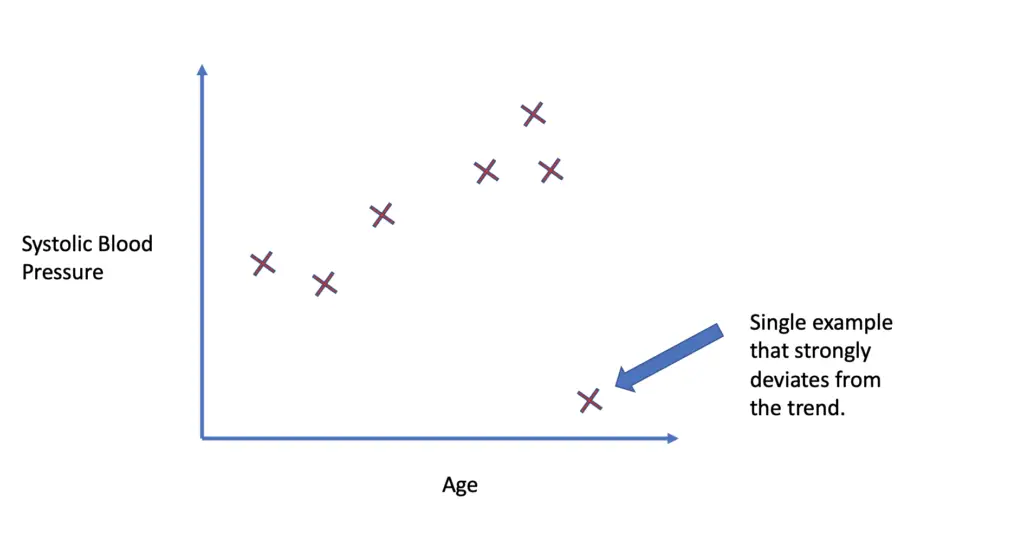

Overfitting is a phenomenon that occurs when a model learns the detail and noise in the training data to such an extent that it harms the model's performance on new data. Regularization is a technique for reducing error by fitting the function appropriately to the given training set while avoiding overfitting. Here, unknown data means data that the model has not seen yet.
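The gap between training error and error on unseen data can be measured directly. The sketch below is an assumption-laden illustration rather than anything from the source: it fits a degree-9 polynomial to 15 noisy samples of a sine curve, once without a penalty and once with a small L2 penalty. The helper names, the data, and the penalty strength `0.01` are all hypothetical.

```python
import numpy as np

def polynomial_features(x, degree):
    """Columns 1, x, x**2, ..., x**degree."""
    return np.vander(x, degree + 1, increasing=True)

def fit_l2(features, y, lam):
    """Least squares with an L2 penalty of strength lam (lam=0 is unpenalized).

    Appending sqrt(lam) * I rows is equivalent to minimizing
    ||features @ w - y||^2 + lam * ||w||^2. For simplicity the intercept
    is penalized as well.
    """
    n_params = features.shape[1]
    augmented = np.vstack([features, np.sqrt(lam) * np.eye(n_params)])
    targets = np.concatenate([y, np.zeros(n_params)])
    return np.linalg.lstsq(augmented, targets, rcond=None)[0]

def mse(features, w, y):
    """Mean squared prediction error."""
    return float(np.mean((features @ w - y) ** 2))

# Synthetic training and test sets drawn from the same noisy sine curve.
rng = np.random.default_rng(42)
x_train = rng.uniform(-1.0, 1.0, size=15)
x_test = rng.uniform(-1.0, 1.0, size=200)
y_train = np.sin(np.pi * x_train) + rng.normal(scale=0.2, size=15)
y_test = np.sin(np.pi * x_test) + rng.normal(scale=0.2, size=200)

degree = 9
F_train = polynomial_features(x_train, degree)
F_test = polynomial_features(x_test, degree)

results = {}
for lam in (0.0, 0.01):
    w = fit_l2(F_train, y_train, lam)
    results[lam] = (mse(F_train, w, y_train), mse(F_test, w, y_test))
    print(f"lam={lam}: train MSE={results[lam][0]:.4f}, test MSE={results[lam][1]:.4f}")
```

The unpenalized fit always has training error at least as low as the penalized one, because it minimizes training error alone. Whether its test error is higher depends on the data and on `lam`, so the comparison is worth running on your own problem rather than taking these settings as representative.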

L2 regularization is the most common form of regularization. Because the regularization term does not depend on the data, it serves only to bias the structure of the model parameters. Understanding regularization is very important for training a good model.

Regularization is one of the most important concepts in machine learning. It is an application of Occam's razor: among models that explain the data, prefer the simpler one.

L2 regularization penalizes the squared magnitude of all parameters in the objective function. Formally, regularization adds a penalty on the parameters to the objective being minimized. This regularization term is probably what most people mean when they talk about regularization.
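Written out, under assumed notation that does not appear in the source ($L(w)$ for the data loss, $R(w)$ for the penalty, and $\lambda \ge 0$ for the penalty strength), the regularized objective is:

$$
J(w) = L(w) + \lambda \, R(w)
$$

For L2 regularization the penalty is the sum of squared weights, and for L1 regularization it is the sum of their absolute values:

$$
R_{\mathrm{L2}}(w) = \sum_{j=1}^{p} w_j^2, \qquad R_{\mathrm{L1}}(w) = \sum_{j=1}^{p} \lvert w_j \rvert
$$

Setting $\lambda = 0$ recovers the unregularized objective, and increasing $\lambda$ trades a closer fit to the training data for smaller weights. The intercept $w_0$ is commonly left out of the penalty, which is why the sums here start at $j = 1$.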

Regularization helps us obtain a model that is less tied to the peculiarities of the training data. Sometimes a machine learning model performs well on the training data but does not perform well on the test data. I have learnt regularization from different sources, and I feel learning from different sources is very helpful.

Regularization is an important concept in machine learning, and it addresses the overfitting problem. It is a method for balancing overfitting and underfitting during training. Setting up a machine learning model is not just about feeding it data.

To understand regularization and its link with machine learning, we first need to understand why we need it. Regularization is one of the techniques used to control overfitting in highly flexible models. Overfitting occurs when a machine learning model is tuned to learn the noise in the data rather than the patterns or trends in the data.

It means the model is not able to generalize to data it has not seen. In general, regularization means making things regular or acceptable.

The ways to go about it can differ. One is to measure a loss function and then reduce it iteratively.

